👋 Hey friends,

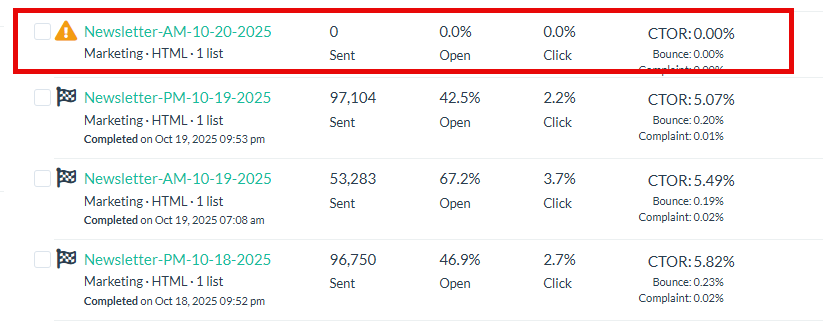

If your sends looked off Monday morning — low delivery, strange delays, weird revenue gaps — you weren’t imagining it.

A major Amazon Web Services (AWS) outage in US-EAST-1 (N. Virginia) triggered a cascade of DNS and networking failures that knocked out dozens of tools, from ESPs and ad servers to ecommerce integrations.

By Monday evening, AWS said everything was restored. But for many senders, the damage was already done.

Before we dive deep into the outage fallout, a quick message from Audience Bridge…

Smart Feed

Smart Feed isn’t built on cold traffic or wishful targeting.

It delivers signal-verified subscribers who are already primed to engage with content like yours — and it scales without sacrificing quality.

That’s why top newsletter operators are using it to grow smarter, not just bigger.

…Let’s break it down. 👇

What Actually Happened

Around 3 a.m. ET on Monday, Oct 20, an internal AWS subsystem that monitors the health of Network Load Balancers malfunctioned, disrupting load balancing and DNS resolution across the region.

Translation: anything running on Lambda, SQS, EC2, or their APIs stopped behaving normally.

If your ESP, webhook, or automation stack sits inside that region (and most do), you felt it.

Affected platforms included:

Outlook

Amazon SES

Klaviyo

Iterable

LiveIntent

Mailchimp

ActiveCampaign

And hundreds more that quietly rely on the same AWS backbone.

It’s a reminder: when half the internet uses the same infrastructure, a single point of failure can knock everyone offline.

How It Hit Email Senders

The outage hit during prime time for deliverability — the pre-dawn send window most newsletters use for morning inbox placement.

Amazon SES sends were not received.

Klaviyo reported full provider outages from 2:50–5:30 a.m. ET. Campaigns queued up, automations froze, and attribution data lagged.

ActiveCampaign, Iterable, and Mailchimp didn’t post official updates, but users across forums and Slack groups reported failed sends, blocked webhooks, and login errors.

👉 In short: some “Monday morning” sends never happened.

Others went out hours late — missing the engagement window entirely.

That lag doesn’t just mess with metrics; it affects reputation.

Late bursts can trigger throttling, false complaint spikes, and deliverability dips across the board.

The Monetization Fallout

The pain didn’t stop at deliverability.

When AWS hiccups, ad tech collapses right behind it.

Ad calls timed out across exchanges.

Newsletter ad slots loaded blank.

Tracking pixels failed.

DNS and API interruptions broke frequency caps and analytics pipelines.

Fewer rendered impressions = lower effective CPMs and less revenue.

And your Oct 20 reporting? Probably garbage.

If you saw lower ad fill, underreported clicks, or missing revenue — this is why.

Why One AWS Region Can Break the Internet

US-EAST-1 is the oldest and most interconnected AWS region — basically the beating heart of the internet.

Thousands of third-party control planes, DNS resolvers, and legacy systems still anchor there.

When its health layer fails, dependent systems self-throttle or error out — the digital equivalent of a multi-car pileup on the cloud highway.

Efficiency meets fragility: centralization is cheap… until it’s not.

What to Do Next Time

1️⃣ Re-send critical campaigns

Pull segments of non-openers or undelivered contacts from Oct 20’s early window.

Use a fresh subject line and stagger your resend to avoid spikes.

2️⃣ Rebuild broken automations

Triggered flows (like Shopify or Stripe webhooks) may have silently failed.

Use time-based fallbacks (for example, a scheduled check that catches orders or signups with no triggered email) to fill the gaps.

3️⃣ Reconcile attribution and revenue

Expect delayed unsubscribe, conversion, and click data.

Re-run Oct 20–21 reports once your ESP finishes syncing logs.

4️⃣ Fix ad fallbacks

If you saw blank ads, set up house-ad defaults and cache logic for next time.

Check UTM and tracking tags — your analytics are likely off.
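To make the "stagger your resend" advice in step 1 concrete, here is a minimal sketch that splits a resend list into batches and spaces them out over time. The function name, batch size, gap, and start time are all hypothetical placeholders, not settings from any particular ESP; most platforms offer their own throttling controls, so treat this as an illustration of the idea only.

```python
from datetime import datetime, timedelta, timezone


def stagger_batches(contacts, batch_size, gap_minutes, start):
    """Split contacts into batches and give each batch its own send time."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    schedule = []
    for i in range(0, len(contacts), batch_size):
        batch_index = i // batch_size
        send_at = start + timedelta(minutes=gap_minutes * batch_index)
        schedule.append((send_at, contacts[i:i + batch_size]))
    return schedule


# Hypothetical example: 10 undelivered contacts, 4 per batch, 30 minutes apart.
contacts = [f"reader{n}@example.com" for n in range(10)]
start = datetime(2030, 1, 1, 13, 0, tzinfo=timezone.utc)  # placeholder start time
plan = stagger_batches(contacts, batch_size=4, gap_minutes=30, start=start)

for send_at, batch in plan:
    print(send_at.isoformat(), len(batch), "contacts")
```

Smaller batches with longer gaps keep the resend from looking like a sudden volume spike to mailbox providers, at the cost of a longer total send window.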

How to Prepare for the Next One

This won’t be the last AWS meltdown. So plan for resilience now.

Go multi-region.

If your ESP or infrastructure provider supports it, spread delivery across multiple regions — even if it costs more.

Lucky for us, our 4 newsletters on Amazon SES are spread across different regions (us-east-1, us-east-2, us-west-1, and us-west-2), so we lost only 25% of revenue during the outage instead of all of it.

Use retry logic & idempotency keys

All webhooks and API calls should retry gracefully after timeouts. Attach an idempotency key to each request so a retry that lands twice is only processed once.
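Here is a rough sketch of what retry logic with an idempotency key can look like. Everything in it is an assumption for illustration: `call_with_retry`, `TransientError`, and the fake endpoint are hypothetical names, and a real integration would pass the key in whatever header or field the receiving API documents.

```python
import random
import time
import uuid


class TransientError(Exception):
    """Hypothetical error a send function raises on timeouts or 5xx responses."""


def call_with_retry(send, payload, max_attempts=5, base_delay=0.5, sleep=time.sleep):
    # One key per logical request, reused on every retry, so the receiver
    # can recognise a duplicate and process the request only once.
    idempotency_key = str(uuid.uuid4())
    for attempt in range(1, max_attempts + 1):
        try:
            return send(payload, idempotency_key)
        except TransientError:
            if attempt == max_attempts:
                raise
            # Exponential backoff with jitter so clients don't retry in lockstep.
            delay = base_delay * (2 ** (attempt - 1))
            sleep(delay + random.uniform(0, delay))


# Simulated receiver: times out twice, then accepts and de-duplicates by key.
processed = {}
calls = {"n": 0}


def fake_send(payload, key):
    calls["n"] += 1
    if calls["n"] <= 2:
        raise TransientError("simulated timeout")
    if key not in processed:  # receiver-side de-duplication
        processed[key] = payload
    return processed[key]


result = call_with_retry(fake_send, {"order_id": 123}, sleep=lambda seconds: None)
print(result, "after", calls["n"], "attempts")
```

The key only helps if the receiving side stores and checks it, so confirm that your ESP or payment provider actually supports idempotent requests before relying on it.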

Keep a warm backup ESP

Maintain a separate IP/domain setup for failsafe sending (alerts, confirmations, or key sends).

Add ad-tech timeouts & cached fallbacks

5–8-second limits can prevent blank ad slots in your newsletters.
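As a sketch of the timeout-plus-fallback idea, the snippet below wraps an ad fetch in a time limit and falls back first to the last ad that loaded for that slot, then to a house ad. The names (`get_ad`, `HOUSE_AD`, the slot ids) and the fetch functions are hypothetical stand-ins; where this logic lives in practice depends on how your ad server and ESP assemble the email.

```python
import time
from concurrent.futures import ThreadPoolExecutor

# Hypothetical house ad shown when nothing else is available.
HOUSE_AD = {"html": "<a href='https://example.com/refer'>Share this newsletter</a>",
            "source": "house"}

_last_good = {}  # slot id -> last ad that loaded successfully
_pool = ThreadPoolExecutor(max_workers=4)


def get_ad(slot, fetch, timeout_s=5.0):
    """Return a live ad if it arrives in time, else a cached or house ad."""
    future = _pool.submit(fetch, slot)
    try:
        ad = future.result(timeout=timeout_s)
    except Exception:
        # Timeout or fetch error: never leave the slot blank.
        return _last_good.get(slot, HOUSE_AD)
    _last_good[slot] = ad
    return ad


def fast_fetch(slot):
    return {"html": "<img src='ad.png'>", "source": "exchange"}


def slow_fetch(slot):
    time.sleep(0.3)  # simulates an ad call hanging during an outage
    return {"html": "<img src='late.png'>", "source": "exchange"}


first = get_ad("top", fast_fetch, timeout_s=0.1)        # live ad
second = get_ad("top", slow_fetch, timeout_s=0.05)      # cached ad for this slot
third = get_ad("footer", slow_fetch, timeout_s=0.05)    # no cache yet: house ad
print(first["source"], second["source"], third["source"])
```

The demo uses very short timeouts so it runs quickly; the 5–8-second range above is the value to tune in production.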

Monitor externally

Use uptime tools that don’t rely on the same AWS region you’re hosted in.

Document your runbooks

Who pauses flows? Who triggers resends? What’s the comms plan?

Run quarterly test drills.

✅ Final Thought

Monday’s AWS outage was more than a blip — it was a reality check.

A few hours of downtime in one region disrupted deliverability, broke automations, skewed analytics, and wiped out ad revenue across the ecosystem.

If you’re scaling email, you have to build for disruption.

That means:

Redundancy in your sending stack.

Visibility in your monitoring.

Graceful fallback systems when the cloud goes dark.

Because AWS won’t call to warn you — it’ll just pull the plug.

The lights are back on for now,

Chris Miquel

PS: Cloud outages aren’t an “if.” They’re a “when.” The operators who win long term are the ones who plan for failure — and keep delivering anyway.

BEFORE YOU GO

Better Inbox Placement Starts Here

If your emails aren’t landing in the inbox, they’re not doing their job. I’ve seen too many brands struggle with deliverability issues without knowing why.

The truth is, a few key optimizations can make all the difference in getting your emails seen, opened, and clicked.